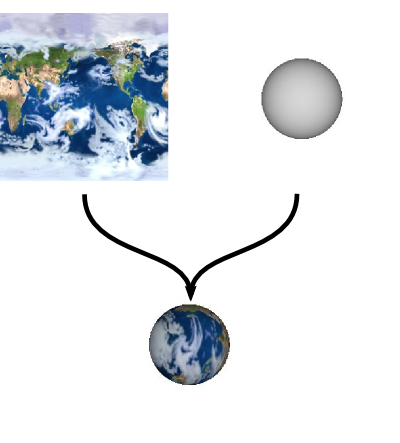

A texture in OpenGL is an image residing in video memory. Textures can be used for many purposes, but the most common is to texture map a mesh:

Textures can also be used for environment mapping (a way of faking reflections without raytracing), image-based lighting, normal mapping, bump mapping, and, more recently, as general sources of array-based data.

There are several different texture targets. The most commonly used one (as above) is GL_TEXTURE_2D, a 2D texture representing a simple image. Other targets allow for 1D textures (useful for storing a single dimensional array or gradient), 3D textures (for volumetric textures or arrays of images), cube textures (for environment mapping), depth textures (for shadowing) and rectangular textures (for efficient 2D-only graphics).

In this course we will only use 2D textures. Traditionally, 2D textures have restrictions on their size: both the width and height must be powers of 2 (e.g., 2, 4, 8, 16, 32, 64, ...). This restriction enables the texture mapping hardware to work far more efficiently than it could otherwise. (OpenGL 2.0 and the GL_ARB_texture_non_power_of_two extension lift this restriction, so with the NVIDIA cards in the lab you can actually create textures of other sizes as well, but possibly at a cost in performance.)
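If you want to check an image's dimensions before uploading it, a test along the following lines may help. This is only a minimal sketch: the function names isPowerOfTwo and nextPowerOfTwo are hypothetical helpers, not part of OpenGL or stb.

```c
#include <assert.h>
#include <stdbool.h>

/* Returns true when n is a positive power of two (1, 2, 4, 8, ...).
   A power of two has exactly one bit set, so n & (n - 1) is zero. */
bool isPowerOfTwo(int n)
{
    return n > 0 && (n & (n - 1)) == 0;
}

/* Returns the smallest power of two that is >= n, for example when
   padding or rescaling an image. Assumes 1 <= n <= 2^30. */
int nextPowerOfTwo(int n)
{
    assert(n >= 1 && n <= (1 << 30));
    int p = 1;
    while (p < n)
        p *= 2;
    return p;
}
```

For example, a 100 x 64 image fails the check on its width, and nextPowerOfTwo(100) suggests 128 as the padded width.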

Loading an image into a texture requires calling glTexImage2D with an array of raw pixel data. There are many libraries for loading and decoding standard image formats such as BMP, PNG and JPEG into such an array.
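To make "an array of raw pixel data" concrete, the sketch below builds a small checkerboard image in memory without any image file. The function name makeCheckerboard and its parameters are hypothetical; the OpenGL calls appear only in the trailing comment and assume a texture is already bound.

```c
#include <assert.h>
#include <stdlib.h>

/* Builds a width x height RGB checkerboard as tightly packed bytes
   (3 bytes per pixel, rows stored one after another).
   The caller owns the returned buffer and must free() it.
   Returns NULL if the allocation fails. */
unsigned char *makeCheckerboard(int width, int height, int cellSize)
{
    assert(width > 0 && height > 0 && cellSize > 0);
    unsigned char *pixels = malloc((size_t)width * height * 3);
    if (pixels == NULL)
        return NULL;

    for (int y = 0; y < height; y++) {
        for (int x = 0; x < width; x++) {
            /* Alternate white and black cells. */
            int white = ((x / cellSize) + (y / cellSize)) % 2 == 0;
            unsigned char value = white ? 255 : 0;
            unsigned char *p = pixels + ((size_t)y * width + x) * 3;
            p[0] = value;  /* red   */
            p[1] = value;  /* green */
            p[2] = value;  /* blue  */
        }
    }
    return pixels;
}

/* With a texture bound, the upload would then look like:
 *   glPixelStorei(GL_UNPACK_ALIGNMENT, 1);
 *   glTexImage2D(GL_TEXTURE_2D, 0, GL_RGB, width, height, 0,
 *                GL_RGB, GL_UNSIGNED_BYTE, pixels);
 */
```

Setting GL_UNPACK_ALIGNMENT to 1 tells OpenGL that rows are tightly packed, which matters for RGB data whenever a row's byte count is not a multiple of 4.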

Because loading images is such a common need, libraries have been developed for it. One is SOIL, the Simple OpenGL Image Library, written in C; a more recent version is SOIL2.

The SOIL and SOIL2 libraries are both layered on top of another library: stb. For our simple purposes we only really need the stb image loader.

Because loading a texture makes OpenGL calls, including one that returns an OpenGL texture id, the load function can only be called after a GLUT window has been initialised. Typically you will load all the textures you need after creating the window, but before calling glutMainLoop.

A simple example using stb to apply a texture to a quad may be found here. It has a function loadTexture, which in turn calls the stb load function:

    unsigned char *data = stbi_load(filename, &width, &height, &components, STBI_rgb);

This sets the image width, height and number of components, and returns a pointer to the texture image data in data. Because STBI_rgb is requested, data always holds 3 bytes per pixel; components reports the number of channels in the file itself.
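One detail worth knowing: stbi_load returns the top row of the image first, whereas glTexImage2D treats the first row it is given as the bottom of the texture (V = 0). Whether the example's loadTexture compensates for this is not shown here, so the following is only a sketch of one way to do it by hand. The function name flipRows is hypothetical; stb_image also offers stbi_set_flip_vertically_on_load for the same purpose.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Reverses the order of the rows of an interleaved 8-bit image in place.
   data holds height rows of width pixels, each pixel having `components`
   bytes, which is the layout stbi_load returns.
   Returns 0 on success, -1 if the temporary row could not be allocated. */
int flipRows(unsigned char *data, int width, int height, int components)
{
    assert(data != NULL && width > 0 && height > 0 && components > 0);
    size_t rowBytes = (size_t)width * components;
    unsigned char *tmp = malloc(rowBytes);
    if (tmp == NULL)
        return -1;

    /* Swap the top and bottom rows, working towards the middle. */
    for (int top = 0, bottom = height - 1; top < bottom; top++, bottom--) {
        unsigned char *a = data + (size_t)top * rowBytes;
        unsigned char *b = data + (size_t)bottom * rowBytes;
        memcpy(tmp, a, rowBytes);
        memcpy(a, b, rowBytes);
        memcpy(b, tmp, rowBytes);
    }
    free(tmp);
    return 0;
}
```

With the stbi_load call above you would pass 3 as the last argument (the STBI_rgb layout), not the components value reported for the file.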

There are many ways to apply a 2-dimensional image to a 3-dimensional mesh. Projective techniques work with arbitrary meshes and are conceptually similar to aiming a slide projector with the image at the mesh:

Usually though specific vertices of the mesh need to correspond to specific coordinates on the texture. A 2D texture has two axes, U and V:

The process of assigning UV coordinates to vertices in a mesh is called UV-mapping, and can be very time consuming for an artist. One way to think about UV-mapping is visualising "unwrapping" the 3D mesh to a flat piece of paper.
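U and V both run from 0 to 1 across the image regardless of its size in pixels. As a rough illustration of how a coordinate selects a texel, the sketch below mimics nearest-neighbour lookup with clamping along one axis. The function name texelIndex is hypothetical, and real hardware filtering is more involved than this.

```c
#include <assert.h>

/* Maps one texture coordinate in [0, 1] to a texel index in
   [0, size - 1]; values outside that range are clamped to the edge. */
int texelIndex(float coord, int size)
{
    assert(size > 0);
    int i = (int)(coord * (float)size);
    if (i < 0)
        i = 0;
    if (i > size - 1)
        i = size - 1;
    return i;
}
```

For a 256-pixel-wide texture, U = 0.5 lands on column 128, and U = 1.0 is clamped to the last column, 255.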

You can also generate UV coordinates procedurally, especially for simple objects such as spheres and cylinders. The following code generates a sphere with polar texture coordinates (it is the same code that was used to texture map the Earth, above):

    // Needs <stdlib.h> for malloc/calloc and <math.h> for sinf, cosf and M_PI.
    // Sphere, Vertex and TexCoord are assumed to be defined elsewhere.
    Sphere *createSphere(float radius, int stacks, int slices)
    {
        Sphere *sphere = malloc(sizeof(Sphere));
        sphere->stacks = stacks;
        sphere->slices = slices;
        sphere->radius = radius;

        // Allocate arrays: one row per stack, one entry per slice
        sphere->vertices = calloc(stacks + 1, sizeof(Vertex *));
        sphere->normals = calloc(stacks + 1, sizeof(Vertex *));
        sphere->texes = calloc(stacks + 1, sizeof(TexCoord *));
        for (int stack = 0; stack <= stacks; stack++) {
            sphere->vertices[stack] = calloc(slices + 1, sizeof(Vertex));
            sphere->normals[stack] = calloc(slices + 1, sizeof(Vertex));
            sphere->texes[stack] = calloc(slices + 1, sizeof(TexCoord));
        }

        // Initialise arrays
        for (int stack = 0; stack <= stacks; stack++) {
            // theta runs from 0 (north pole) to pi (south pole)
            float theta = stack * M_PI / (float)stacks;
            for (int slice = 0; slice <= slices; slice++) {
                // phi runs once around the sphere, from 0 to 2 pi
                float phi = slice * 2 * M_PI / (float)slices;

                // Vertex coordinates
                Vertex v;
                v.x = radius * sinf(theta) * cosf(phi);
                v.z = radius * sinf(theta) * sinf(phi);
                v.y = radius * cosf(theta);
                sphere->vertices[stack][slice] = v;

                // Normal: the unit vector from the centre through the vertex
                Vertex n;
                n.x = sinf(theta) * cosf(phi);
                n.z = sinf(theta) * sinf(phi);
                n.y = cosf(theta);
                sphere->normals[stack][slice] = n;

                // Texture coordinates
                TexCoord tc;
                tc.u = 1 - (float)slice / (float)slices;
                tc.v = 1 - (float)stack / (float)stacks;
                sphere->texes[stack][slice] = tc;
            }
        }

        return sphere;
    }

When drawing the mesh with glVertex3f (or glVertex3fv), specify a texture coordinate (UV coordinate) for each vertex by calling glTexCoord2f (or glTexCoord2fv) first:

    void drawTexturedSphere(Sphere *sphere)
    {
        glEnable(GL_TEXTURE_2D);
        glBindTexture(GL_TEXTURE_2D, sphere->tex);
        glBegin(GL_QUADS);
        for (int stack = 0; stack < sphere->stacks; stack++) {
            for (int slice = 0; slice < sphere->slices; slice++) {
                glTexCoord2fv((GLfloat*)&sphere->texes[stack][slice]);
                glVertex3fv((GLfloat*)&sphere->vertices[stack][slice]);
                glTexCoord2fv((GLfloat*)&sphere->texes[stack+1][slice]);
                glVertex3fv((GLfloat*)&sphere->vertices[stack+1][slice]);
                glTexCoord2fv((GLfloat*)&sphere->texes[stack+1][slice+1]);
                glVertex3fv((GLfloat*)&sphere->vertices[stack+1][slice+1]);
                glTexCoord2fv((GLfloat*)&sphere->texes[stack][slice+1]);
                glVertex3fv((GLfloat*)&sphere->vertices[stack][slice+1]);
            }
        }
        glEnd();
        glBindTexture(GL_TEXTURE_2D, 0);
    }

To apply the texture to a UV-mapped mesh, enable texturing and bind the appropriate texture name (the number returned from loadTexture):

    glBindTexture(GL_TEXTURE_2D, earth_texture);
    glEnable(GL_TEXTURE_2D);
    draw_sphere();

A sprite is the traditional term given to a 2D image displayed in a game. In OpenGL sprites are rendered by texturing a quad. The following code draws a quad with UV coordinates:

    glBegin(GL_QUADS);
    glTexCoord2f(0, 0); glVertex3f(0, 0, 0);
    glTexCoord2f(1, 0); glVertex3f(1, 0, 0);
    glTexCoord2f(1, 1); glVertex3f(1, 1, 0);
    glTexCoord2f(0, 1); glVertex3f(0, 1, 0);
    glEnd();

The UV coordinates cover the whole range 0 to 1, so if texture mapping is enabled the quad will be drawn with the image fully covering it.

A good use of sprites in a 3D game is for particle effects. More interesting effects can be achieved with sprite particles than just points:

The following image shows a wireframe view of the sprite particles. Notice that when viewed side-on the particles don't look very good:

To fix this, you need to re-orient each particle so that it's facing the viewer. You can do this with OpenGL matrix operations (reversing the camera rotation) or by calculating new vertex coordinates yourself with some trigonometry.

This technique of re-orienting the sprites is called billboarding. If you're having trouble getting this transform correct, try searching for "opengl billboarding" in Google; there will be plenty of examples.
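As a starting point, here is a minimal sketch of one common billboarding approach: read the camera's right and up directions out of the modelview matrix and build each quad from them. The Vec3 type and the function billboardCorners are hypothetical names, and the sketch assumes the modelview matrix contains only rotation and translation (no scaling).

```c
#include <assert.h>

typedef struct { float x, y, z; } Vec3;  /* hypothetical helper type */

/* Computes the four corners of a camera-facing quad centred on `centre`.
   m is a column-major modelview matrix, e.g. as filled in by
   glGetFloatv(GL_MODELVIEW_MATRIX, m). The first two rows of its
   upper-left 3x3 block are the camera's right and up directions,
   expressed in the coordinate space the matrix transforms from. */
void billboardCorners(const float m[16], Vec3 centre, float halfSize,
                      Vec3 corners[4])
{
    assert(halfSize >= 0.0f);
    Vec3 right = { m[0] * halfSize, m[4] * halfSize, m[8] * halfSize };
    Vec3 up    = { m[1] * halfSize, m[5] * halfSize, m[9] * halfSize };

    /* Order matches the UVs (0,0), (1,0), (1,1), (0,1) of a sprite quad. */
    corners[0] = (Vec3){ centre.x - right.x - up.x,
                         centre.y - right.y - up.y,
                         centre.z - right.z - up.z };
    corners[1] = (Vec3){ centre.x + right.x - up.x,
                         centre.y + right.y - up.y,
                         centre.z + right.z - up.z };
    corners[2] = (Vec3){ centre.x + right.x + up.x,
                         centre.y + right.y + up.y,
                         centre.z + right.z + up.z };
    corners[3] = (Vec3){ centre.x - right.x + up.x,
                         centre.y - right.y + up.y,
                         centre.z - right.z + up.z };
}
```

Each particle's four corners can then be passed to glVertex3f in order, paired with the usual quad texture coordinates, while the modelview matrix that was read is still current.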

Newer video cards (including those in the Sutherland lab) support texture mapping points directly. This is provided by the GL_ARB_point_sprite and GL_NV_point_sprite extensions, and has been part of core OpenGL since version 2.0. (Windows users will need to jump through some hoops to get to the appropriate functions.)

Google for "OpenGL point sprites" if you're interested in implementing particles in this manner.