Tutorial 5: Introduction to the use of shaders

Shaders are specialized programs that override fixed-function pipeline functionality and perform certain stages of the graphics pipeline.

A number of shader types exist in OpenGL, each operating in a different stage of the graphics pipeline: vertex, fragment, geometry and tessellation shaders. There are also compute shaders, which run outside the graphics pipeline, but this tutorial focuses on the first two (vertex and fragment shaders).

Exercises:

In this tutorial:

- Compile and run the Orange book brick and particle system examples.

- Compile a program to load, compile and use vertex and fragment shaders.

Note that we use the OpenGL Shading Language (GLSL) in this subject, but other shading languages exist.

Build and run the Orange book brick and particle demos

- Extract the brick and particle examples from the Orange book. Build and run them. (-lGLU may need to be added to the makefile.)

- Look at particle.vert and identify the code that moves the particle. When might each particle respawn?

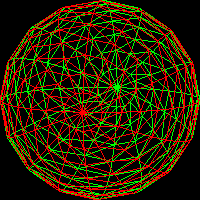

- For the brick demo, change the model being textured to a sphere by following the instructions printed when the program runs.

- Reduce the tessellation of the sphere (the slices and stacks arguments of glutSolidSphere). What difference do you see as the tessellation is reduced, particularly in the highlight?
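One way to reason about the highlight is to assume that lighting is evaluated per vertex and the resulting intensity is interpolated across each triangle (check brick.vert to confirm this). Under that assumption, the interpolated value along an edge can never exceed the larger of its two vertex values. The C sketch below compares coarse and fine tessellation. It uses a hypothetical 2D edge and a Blinn-Phong-style specular term max(dot(N, H), 0)^shininess, chosen for simplicity; the brick shader's exact formula may differ. Vec2, specular and gouraud_specular are illustrative names only.

```c
/* 2D unit vector; the half vector H is assumed to be (0, 1) in the usage below. */
typedef struct { float x, y; } Vec2;

/* Blinn-Phong-style specular term max(dot(N, H), 0)^shininess.
   An integer exponent keeps the sketch free of the maths library. */
float specular(Vec2 n, Vec2 h, int shininess)
{
    float d = n.x * h.x + n.y * h.y;
    float s = 1.0f;
    if (d <= 0.0f)
        return 0.0f;
    for (int i = 0; i < shininess; ++i)
        s *= d;
    return s;
}

/* Per-vertex (Gouraud-style) lighting: evaluate the term at both ends of an
   edge and interpolate linearly, as the rasterizer does for a varying.
   t = 0 is vertex 0, t = 1 is vertex 1, t = 0.5 is the edge midpoint. */
float gouraud_specular(Vec2 n0, Vec2 n1, Vec2 h, int shininess, float t)
{
    return (1.0f - t) * specular(n0, h, shininess) + t * specular(n1, h, shininess);
}
```

Take the vertex normals {-0.6, 0.8} and {0.6, 0.8} (about 37 degrees either side of H) with a shininess of 16. The midpoint value is then roughly 0.03, against 1.0 at the true peak. With {-20/101, 99/101} and {20/101, 99/101} (about 11 degrees either side), it rises to roughly 0.73.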

Create your own shader

- Extract tute-shaders.zip, then compile and run it, experiment with it and read the source.

- Enable the debugging mode, which prints out the modelview and projection matrices. (Most OpenGL state settings can be read using the glGet family of functions.) Then disable the mode again.

- Extract shaders.zip and add its source files to your makefile, or simply compile and link all the .c files from the command line.

- Add a call to getShader in init() in tute-shaders.c, storing the return value in a variable shaderProgram. You will need to create the vertex and fragment shader source files (see below).

- Add a call to glUseProgram to use the shader program straight after the call to getShader (an interactive control will be added later).
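Matrices read back with glGet, for example glGetFloatv(GL_MODELVIEW_MATRIX, m), arrive as 16 floats in column-major order. Element (row, col) is therefore m[col * 4 + row], and a translation sits in m[12], m[13] and m[14]. Below is a minimal sketch of a printing helper under that layout. mat_at and print_matrix are hypothetical names, and the tutorial's own debugging output may already do something similar.

```c
#include <stdio.h>

/* Element (row, col) of a 4x4 matrix stored in OpenGL's column-major order. */
float mat_at(const float m[16], int row, int col)
{
    return m[col * 4 + row];
}

/* Print the matrix row by row in the conventional mathematical layout,
   so a translation appears in the right-hand column. */
void print_matrix(const char *label, const float m[16])
{
    printf("%s\n", label);
    for (int row = 0; row < 4; ++row) {
        for (int col = 0; col < 4; ++col)
            printf(" %8.3f", mat_at(m, row, col));
        printf("\n");
    }
}
```

In the GL program this could be used as: float mv[16]; glGetFloatv(GL_MODELVIEW_MATRIX, mv); print_matrix("modelview", mv);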

Create two files, shader.vert and shader.frag:

```glsl
// shader.vert
void main(void)
{
    // os - object space, es - eye space, cs - clip space
    vec4 osVert = gl_Vertex;
    vec4 esVert = gl_ModelViewMatrix * osVert;
    vec4 csVert = gl_ProjectionMatrix * esVert;
    gl_Position = csVert;
    // Equivalent to: gl_Position = gl_ProjectionMatrix * gl_ModelViewMatrix * gl_Vertex;
}
```

```glsl
// shader.frag
void main(void)
{
    gl_FragColor = vec4(1, 0, 0, 1);
}
```

The vertex shader transforms each vertex by both the modelview and projection matrices. This is what allows you to move objects with glTranslatef, and it also applies the perspective projection set up by gluPerspective.
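The sketch below shows exactly what the matrix lines of shader.vert compute. It writes the same object-space to eye-space to clip-space chain in plain C, which is handy for checking numbers by hand, for instance against the matrices printed by the debugging mode. mat4_mul_vec4 and transform_vertex are hypothetical helpers, and the matrices are assumed to use OpenGL's column-major layout.

```c
/* out = m * v for a column-major 4x4 matrix, matching "matrix * vector" in GLSL.
   out must not alias v. */
void mat4_mul_vec4(const float m[16], const float v[4], float out[4])
{
    for (int row = 0; row < 4; ++row) {
        out[row] = 0.0f;
        for (int col = 0; col < 4; ++col)
            out[row] += m[col * 4 + row] * v[col];
    }
}

/* Same steps as shader.vert: object space -> eye space -> clip space. */
void transform_vertex(const float modelview[16], const float projection[16],
                      const float osVert[4], float csVert[4])
{
    float esVert[4];
    mat4_mul_vec4(modelview, osVert, esVert);   /* esVert = modelview * osVert */
    mat4_mul_vec4(projection, esVert, csVert);  /* csVert = projection * esVert */
}
```

After the vertex shader, OpenGL itself divides by csVert.w (the perspective divide) and applies the viewport transform. Neither step appears in the shader.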

The fragment shader colours every rendered fragment red. gl_FragColor is the main output of a fragment shader.

Run the program and check that the geometry is drawn with the colour generated by the fragment shader. Change the colour to green to confirm it works as expected.

Now, in the fragment shader, set the colour to the fragment's depth:

```glsl
// shader.frag
void main(void)
{
    float depth = gl_FragCoord.z;
    gl_FragColor = vec4(vec3(depth), 1);
}
```

You should see something like:

OpenGL maps the depth values into the range [0,1] by default (the range can be changed with glDepthRange). Does the image make sense?

Try setting the color to 1-depth. Does the resulting image also make sense?
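The following sketch reproduces the fixed steps that turn an eye-space distance into gl_FragCoord.z: the depth terms of the projection matrix, the perspective divide and the default [0,1] depth range. It assumes a standard gluPerspective projection; window_depth and the near/far values used below are hypothetical. For a point at eye distance d, the result simplifies to far * (d - near) / (d * (far - near)), which is not linear in d.

```c
#include <assert.h>

/* Window-space depth (the value in gl_FragCoord.z) of a point at eye-space
   distance d in front of the camera, assuming a gluPerspective-style projection
   with the given near/far planes and the default glDepthRange(0, 1). */
float window_depth(float d, float near_plane, float far_plane)
{
    assert(near_plane > 0.0f && far_plane > near_plane);
    /* Third-row entries of the gluPerspective matrix. */
    float A = (far_plane + near_plane) / (near_plane - far_plane);
    float B = 2.0f * far_plane * near_plane / (near_plane - far_plane);
    float z_eye = -d;                  /* the camera looks down the -z axis */
    float z_clip = A * z_eye + B;
    float w_clip = -z_eye;             /* equals d */
    float z_ndc = z_clip / w_clip;     /* perspective divide: [-1, 1] */
    return 0.5f * z_ndc + 0.5f;        /* viewport transform: [0, 1] */
}
```

For example, with near = 1 and far = 100, window_depth(2, 1, 100) is about 0.505 and window_depth(10, 1, 100) is about 0.909. Most of the depth range is spent close to the near plane.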

Now add an interactive control to switch between shaders and the fixed-function pipeline. Use render_state.useShaders to select what is passed to glUseProgram; calling glUseProgram(0) disables shaders and reverts to the fixed-function pipeline. Also add the appropriate code to update_renderstate and to the keyboard event handling.
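One way to structure the toggle is sketched below in plain C, with the GL call itself left to the caller. It uses a hypothetical RenderState struct standing in for the type of render_state, a helper that picks the program to pass to glUseProgram, and a key handler. The field type, the helper names and the choice of the 's' key are all assumptions; check which keys tute-shaders.c already uses.

```c
/* Hypothetical stand-in for the tutorial's render state; the real struct
   will have other fields. 0 means "use the fixed-function pipeline". */
typedef struct {
    int useShaders;
} RenderState;

/* Program object to hand to glUseProgram: the compiled shader program when
   shaders are enabled, otherwise 0 (fixed-function pipeline). */
unsigned int program_to_use(const RenderState *rs, unsigned int shaderProgram)
{
    return rs->useShaders ? shaderProgram : 0u;
}

/* Keyboard handling: toggle shader use on an assumed key. */
void handle_key(RenderState *rs, unsigned char key)
{
    if (key == 's')
        rs->useShaders = !rs->useShaders;
}
```

Assuming render_state has a compatible type, update_renderstate could then call glUseProgram(program_to_use(&render_state, shaderProgram)), and the keyboard callback would call handle_key before requesting a redisplay.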

Visual Debugging

- Deliberately introduce a syntax error into both shaders. What happens? Do you have to recompile the C program when a shader changes?

- There is no direct equivalent to printf in shaders, so they must be debugged visually, e.g. by setting colours.

- Try modifying the fragment shader to colour fragments red or green based on depth, e.g. red for 0 <= depth < 0.5 and green for 0.5 <= depth < 1.0. Use the interactive controls to switch the polygon mode to wireframe (GL_LINE), and experiment with back and front faces and with culling.

- Now explore creating an image using data from the vertex shader interpolated by the rasterizer. Declare a varying float variable named depth in both the vertex and fragment shaders. In the vertex shader, set it to the eye-space depth -esVert.z; the camera looks down the -z axis in eye space, so this value is positive in front of the camera. This value will be interpolated for each rasterized fragment.

- In the fragment shader, set the output colour to represent depth. This could be done simply with gl_FragColor.rgb = vec3(depth), or with a custom colour ramp that interpolates individual channels. Unlike gl_FragCoord.z, the eye-space depth is not confined to [0,1], so it will need scaling before it is displayed. GLSL offers a full range of maths functions and utilities (see the GLSL reference at the bottom of the page).
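The colour logic for the red/green depth test and for a depth ramp is sketched below in C, so the arithmetic can be checked on the CPU. Each function maps directly to a few lines of GLSL, where clampf and mixf correspond to the built-in clamp and mix functions. All the names, the blue fallback colour and the near/far ramp distances are hypothetical choices for illustration.

```c
/* RGB colour with components in [0, 1], like gl_FragColor.rgb. */
typedef struct { float r, g, b; } Colour;

/* Visual-debugging classification from the exercise: red for
   0 <= depth < 0.5, green for 0.5 <= depth < 1.0. Anything outside [0, 1)
   is shown in blue here (an arbitrary choice) so it stands out. */
Colour depth_threshold_colour(float depth)
{
    Colour c = {0.0f, 0.0f, 0.0f};
    if (depth >= 0.0f && depth < 0.5f)
        c.r = 1.0f;
    else if (depth >= 0.5f && depth < 1.0f)
        c.g = 1.0f;
    else
        c.b = 1.0f;
    return c;
}

/* C versions of GLSL's clamp() and mix(). */
float clampf(float x, float lo, float hi) { return x < lo ? lo : (x > hi ? hi : x); }
float mixf(float a, float b, float t) { return a + (b - a) * t; }

/* Colour ramp for the interpolated eye-space depth (-esVert.z): normalise it
   between two assumed distances, then blend each channel from yellow (near)
   to blue (far). */
Colour depth_ramp_colour(float eyeDepth, float nearDepth, float farDepth)
{
    float t = clampf((eyeDepth - nearDepth) / (farDepth - nearDepth), 0.0f, 1.0f);
    Colour c;
    c.r = mixf(1.0f, 0.0f, t);
    c.g = mixf(1.0f, 0.0f, t);
    c.b = mixf(0.0f, 1.0f, t);
    return c;
}
```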

There are many ways to shade objects. A common method is to use pre-computed geometry normals for lighting calculations. This will be covered in next week's tutorial.

Axes

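Try adding a set of red, green and blue axes. Hint: use gl_FragColor = gl_Color in the fragment shader. For gl_Color to carry each vertex's colour into the fragment shader, the vertex shader must also pass it on, e.g. with gl_FrontColor = gl_Color;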
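Below is a minimal sketch of the axis geometry, assuming the common convention of x in red, y in green and z in blue. build_axes is a hypothetical helper that fills in one position and one colour for each of the six line endpoints.

```c
/* Fill positions and colours for three axis lines (two endpoints each).
   Vertices 0-1: x axis in red, 2-3: y axis in green, 4-5: z axis in blue.
   Each line runs from the origin to 'length' along its axis. */
void build_axes(float length, float positions[6][3], float colours[6][3])
{
    for (int axis = 0; axis < 3; ++axis) {
        for (int end = 0; end < 2; ++end) {
            int v = axis * 2 + end;
            for (int k = 0; k < 3; ++k) {
                positions[v][k] = (end == 1 && k == axis) ? length : 0.0f;
                colours[v][k] = (k == axis) ? 1.0f : 0.0f;
            }
        }
    }
}
```

The arrays can then be drawn between glBegin(GL_LINES) and glEnd(), calling glColor3fv(colours[i]) followed by glVertex3fv(positions[i]) for each of the six vertices.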